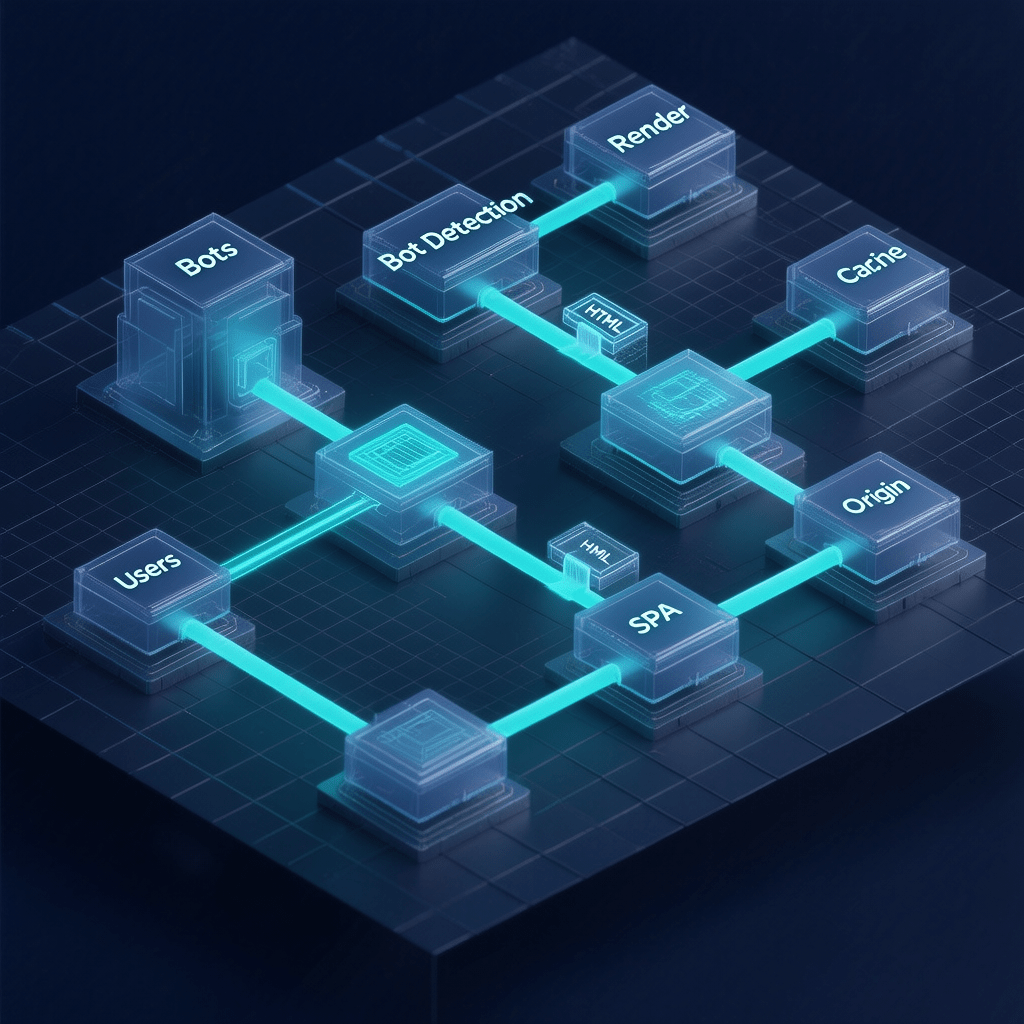

Feature image: dynamic rendering for Googlebot and users routed through an edge proxy with caching. All images on this page are compressed for fast loading.

Dynamic Rendering for Googlebot and Users: 21 Proven Wins

By Morne de Heer · Published by Brand Nexus Studios

If your JavaScript app loads beautifully for people but rarely lands in the index, dynamic rendering for Googlebot and users can bridge the gap. It serves pre-rendered HTML to crawlers and link preview bots while keeping the app-first experience for humans.

Right away, dynamic rendering for Googlebot and users provides crawlable content, reliable meta tags, and consistent social previews. You get practical SEO wins without a complete rebuild, and you can shape a migration path to server rendering later.

Because search engines evolve fast, you need a solution that honors current guidance and still moves the needle. With careful scoping, caching, and validation, dynamic rendering for Googlebot and users becomes a safe, results-focused tactic rather than a crutch.

In this guide, you will learn what dynamic rendering for Googlebot and users is, when to use it, how it works end to end, and how to measure the impact. You will also get code patterns, caching strategy, and 21 field-tested wins you can implement now.

What is dynamic rendering and why it matters

At its core, dynamic rendering for Googlebot and users is selective prerendering. Bots receive fully rendered HTML that is ready to crawl. People receive your standard SPA or hybrid framework output with client-side interactivity intact.

The magic comes from routing. A lightweight function inspects each request and decides whether to serve prerendered HTML or the normal app. With the right cache rules, dynamic rendering for Googlebot and users keeps TTFB low and scales during traffic spikes.

Modern guidance frames dynamic rendering as a stopgap, not a forever solution. That is a fair stance. Yet many teams need results now. When implemented with clean boundaries, dynamic rendering for Googlebot and users delivers real SEO gains while you advance toward SSR or static generation.

When to use dynamic rendering for Googlebot and users

Use dynamic rendering for Googlebot and users when a JavaScript-first site struggles to expose critical content to crawlers. Think product detail pages, documentation, marketplaces, and UGC routes that depend on API-driven content.

It also helps when accurate link previews drive growth. Messaging apps and social networks use their own link expanders, and dynamic rendering for Googlebot and users ensures Open Graph and Twitter Cards resolve with the correct title, description, and image.

Finally, use it during migrations. If SSR or static generation is on your roadmap, dynamic rendering for Googlebot and users keeps rankings steady while you de-risk the transition in phases.

How the architecture works

The flow is straightforward. Your edge function intercepts traffic, checks for eligible bots, and then serves either cached prerendered HTML or the original app. With simple rules, dynamic rendering for Googlebot and users becomes predictable and easy to maintain.

- Detect eligible crawlers and link preview agents by user agent and, if needed, reverse DNS for Googlebot.

- Confirm the request is GET and accepts text/html before dynamic rendering for Googlebot and users kicks in.

- Look for a cached snapshot. If found, return it with proper cache headers.

- If missing, call a renderer, sanitize the HTML, cache it, and respond.

- Serve the standard client-side app to humans with no changes.

Code pattern for dynamic rendering

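Below is a minimal router you can adapt. It targets Cloudflare Workers, since it relies on `caches.default`, `ctx.waitUntil`, and the `cf` fetch option. It shows where dynamic rendering for Googlebot and users fits in the request lifecycle. Before production use, expand it with stricter validation, HTML sanitization, and error handling.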

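```javascript
// Minimal dynamic-rendering router for Cloudflare Workers (module syntax).
// renderer.example.com is a placeholder for your prerender service.
const BOT_UA = /(googlebot|google-inspectiontool|bingbot|duckduckbot|baiduspider|yandex|slurp|facebookexternalhit|twitterbot|linkedinbot|discord|whatsapp|slackbot)/i;
const RENDER_TIMEOUT_MS = 8000; // abort renders that take longer than 8 s

export default {
  async fetch(request, env, ctx) {
    const url = new URL(request.url);
    const ua = request.headers.get("User-Agent") || "";
    const accept = request.headers.get("Accept") || "";
    const isHtml = accept.includes("text/html");
    const isGet = request.method === "GET";
    const isEligibleBot = BOT_UA.test(ua);

    // Humans, non-GET requests, and non-HTML requests pass through to the app untouched.
    if (!(isGet && isHtml && isEligibleBot)) {
      return fetch(request);
    }

    // Key snapshots separately from the human-facing response for the same URL,
    // so a cached bot snapshot can never be served to a person.
    const keyUrl = new URL(url);
    keyUrl.searchParams.set("__prerender", "1");
    const cacheKey = new Request(keyUrl.toString(), { method: "GET" });
    const cache = caches.default;
    const cached = await cache.match(cacheKey);
    if (cached) return cached;

    // Ask the renderer for a snapshot, aborting if it takes too long.
    const renderer = `https://renderer.example.com/render?url=${encodeURIComponent(url.toString())}`;
    const controller = new AbortController();
    const timer = setTimeout(() => controller.abort(), RENDER_TIMEOUT_MS);
    let html;
    try {
      const resp = await fetch(renderer, { headers: { "User-Agent": ua }, signal: controller.signal, cf: { cacheTtl: 0 } });
      if (!resp.ok) return fetch(request); // renderer error: serve the normal app
      html = await resp.text();
    } catch (err) {
      return fetch(request); // timeout or network failure: serve the normal app
    } finally {
      clearTimeout(timer);
    }

    const toCache = new Response(html, {
      status: 200,
      headers: {
        "Content-Type": "text/html; charset=utf-8",
        "Cache-Control": "public, max-age=600, s-maxage=600, stale-while-revalidate=86400",
        "Vary": "Accept-Encoding, User-Agent",
      },
    });
    // Store the snapshot without delaying the response.
    ctx.waitUntil(cache.put(cacheKey, toCache.clone()));
    return toCache;
  },
};
```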

21 proven wins with dynamic rendering for Googlebot and users

- Instantly crawlable HTML for routes that previously required JavaScript hydration.

- Higher index coverage on deep category and pagination pages.

- Stable Open Graph and Twitter Cards for consistent social previews.

- Lower TTFB for bots thanks to cached snapshots at the edge.

- Reduced soft 404s where content was delayed or hidden behind scripts.

- Cleaner link equity through reliable canonicals and hreflang tags.

- Improved structured data detection with fewer rendering errors.

- Safer migrations while you roll out SSR or static generation by section.

- Predictable social link expanders across Slack, WhatsApp, X, and LinkedIn.

- Lower origin load when viral shares drive bot traffic and previews.

- Fewer JavaScript-induced crawl anomalies that derail QA.

- Resilience with stale-while-revalidate during renderer blips.

- Granular control by route and bot type without app rewrites.

- Better analytics fidelity, since bots read static snapshots instead of executing app code and firing tracking scripts.

- Faster experimentation on head tags and preview images.

- Clear rollback path if any issue appears in production.

- Framework-agnostic: the router sits in front of the app, so it works with whatever framework and build tools you already use.

- Edge-first privacy by excluding authenticated and sensitive pages.

- Hands-off previews for marketing teams sharing URLs across channels.

- Measurable ranking improvements on content that needs quick indexing.

- A practical bridge from today’s SPA to tomorrow’s SSR-first architecture.

Use this list as a playbook and prioritize what matters most to your funnel. With disciplined scoping, dynamic rendering for Googlebot and users can deliver these gains without adding risk to your roadmap.

SEO requirements to lock in

Great HTML snapshots are not enough. For durable results, dynamic rendering for Googlebot and users must output clean, consistent SEO signals that match your origin content.

- Set unique titles and meta descriptions that mirror on-page content.

- Render canonical tags, hreflang where relevant, and robots directives explicitly.

- Output Open Graph and Twitter Card tags with accurate images and alt text.

- Include JSON-LD structured data for articles, products, or FAQs.

- Render primary content in the DOM, not just placeholders.
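A shared helper is one way to keep these tags consistent across templates and snapshots. The sketch below assumes a hypothetical `page` object (`title`, `description`, `canonical`, `robots`, `alternates`, `image`, `imageAlt`, `jsonLd`). Rename the fields to match your CMS or router.

```javascript
// Escape text for safe use inside HTML attributes and element content.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Build the SEO-critical head tags for one page.
// `page` is a hypothetical shape; adapt field names to your data source.
function renderHeadTags(page) {
  const tags = [
    `<title>${escapeHtml(page.title)}</title>`,
    `<meta name="description" content="${escapeHtml(page.description)}">`,
    `<link rel="canonical" href="${escapeHtml(page.canonical)}">`,
    `<meta name="robots" content="${escapeHtml(page.robots || "index, follow")}">`,
  ];
  // hreflang alternates, e.g. { "de-de": "https://example.com/de/p/1" }
  for (const [lang, href] of Object.entries(page.alternates || {})) {
    tags.push(`<link rel="alternate" hreflang="${escapeHtml(lang)}" href="${escapeHtml(href)}">`);
  }
  // Open Graph and Twitter Card tags, including image alt text.
  tags.push(
    `<meta property="og:title" content="${escapeHtml(page.title)}">`,
    `<meta property="og:description" content="${escapeHtml(page.description)}">`,
    `<meta property="og:url" content="${escapeHtml(page.canonical)}">`,
    `<meta property="og:image" content="${escapeHtml(page.image)}">`,
    `<meta property="og:image:alt" content="${escapeHtml(page.imageAlt)}">`,
    '<meta name="twitter:card" content="summary_large_image">',
    `<meta name="twitter:image:alt" content="${escapeHtml(page.imageAlt)}">`,
  );
  // JSON-LD: escape "<" so the payload can never close the script element early.
  if (page.jsonLd) {
    const json = JSON.stringify(page.jsonLd).replace(/</g, "\\u003c");
    tags.push(`<script type="application/ld+json">${json}</script>`);
  }
  return tags.join("\n");
}
```

Because snapshots and client-rendered views can both call the same helper, bots and people see identical titles, canonicals, and preview tags.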

One more tip. Keep internal linking logic intact in snapshots. Dynamic rendering for Googlebot and users should never hide navigation or related links that support crawl paths.

Bot detection and safe routing

Bot detection is the gatekeeper. A narrow allowlist keeps dynamic rendering for Googlebot and users precise and audit-friendly. Avoid broad UA rules that accidentally include real visitors.

- Googlebot, plus Google-InspectionTool, the agent behind Search Console's URL Inspection and the Rich Results Test, for validation.

- Bingbot and DuckDuckBot where relevant to your markets.

- FacebookExternalHit, Twitterbot, LinkedInBot, Discord, WhatsApp, Slack link expanders.

For sensitive flows, verify Googlebot using reverse DNS. This extra step strengthens trust in dynamic rendering for Googlebot and users when rankings are on the line.
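Keeping the allowlist as versioned data, rather than one long regular expression, makes reviews and change history easier. This sketch is hypothetical. The `BOT_ALLOWLIST` shape and its tokens mirror the agents listed above, and you should confirm each token against the vendor's published user agent string.

```javascript
// Versioned allowlist: each entry maps a lowercase UA substring to a category.
// Tokens are assumptions; confirm them against each vendor's documentation.
const BOT_ALLOWLIST = {
  version: 1, // bump on every change and record the reason in your changelog
  entries: [
    { token: "googlebot", category: "search" },
    { token: "google-inspectiontool", category: "search" },
    { token: "bingbot", category: "search" },
    { token: "duckduckbot", category: "search" },
    { token: "facebookexternalhit", category: "preview" },
    { token: "twitterbot", category: "preview" },
    { token: "linkedinbot", category: "preview" },
    { token: "discordbot", category: "preview" },
    { token: "whatsapp", category: "preview" },
    { token: "slackbot", category: "preview" },
  ],
};

// Return the matching category, or null for anything not on the list.
function classifyUserAgent(ua, allowlist = BOT_ALLOWLIST) {
  const lower = (ua || "").toLowerCase();
  const hit = allowlist.entries.find((entry) => lower.includes(entry.token));
  return hit ? hit.category : null;
}
```

Returning a category rather than a boolean lets the router treat search crawlers and preview agents differently, for example by applying reverse DNS checks only to the search group.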

Caching strategy that makes this fast

Caching is where the speed comes from. Without it, dynamic rendering for Googlebot and users would feel slow and expensive. With it, your snapshots fly and your origin stays calm under load.

- Evergreen pages: 1 to 24 hours with stale-while-revalidate of 1 day.

- News and feeds: 5 to 15 minutes with prewarming on publish.

- Product pages: 15 to 60 minutes, tuned for inventory and price volatility.

Attach ETag and Last-Modified headers and respect If-None-Match when your renderer supports it. With a strong cache plan, dynamic rendering for Googlebot and users maintains low TTFB worldwide.
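The TTL bands and conditional requests can be expressed as two small helpers. This is a sketch that assumes path prefixes such as `/news/` and `/products/` (placeholders for your own routes) and a weak ETag derived from a hash of the snapshot HTML. `cacheControlFor`, `etagFor`, and `isNotModified` are illustrative names.

```javascript
// Route-level TTLs in seconds. Prefixes are placeholders; values sit inside
// the ranges listed above.
const TTL_RULES = [
  { prefix: "/news/", maxAge: 600 },      // news and feeds: 5-15 minutes
  { prefix: "/products/", maxAge: 1800 }, // product pages: 15-60 minutes
];
const EVERGREEN = { maxAge: 21600, swr: 86400 }; // 6 hours, stale-while-revalidate 1 day

// Build the Cache-Control header for a snapshot path.
function cacheControlFor(pathname) {
  const rule = TTL_RULES.find((r) => pathname.startsWith(r.prefix)) || EVERGREEN;
  let value = `public, max-age=${rule.maxAge}, s-maxage=${rule.maxAge}`;
  if (rule.swr) value += `, stale-while-revalidate=${rule.swr}`;
  return value;
}

// Weak ETag from a 32-bit FNV-1a hash over the HTML's UTF-16 code units.
function etagFor(html) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < html.length; i++) {
    hash ^= html.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return `W/"${hash.toString(16)}"`;
}

// If-None-Match uses weak comparison, so ignore W/ prefixes on both sides.
function isNotModified(ifNoneMatch, etag) {
  if (!ifNoneMatch) return false;
  if (ifNoneMatch.trim() === "*") return true;
  const opaque = (tag) => tag.trim().replace(/^W\//, "");
  return ifNoneMatch.split(",").some((tag) => opaque(tag) === opaque(etag));
}
```

When `isNotModified` returns true, respond with status 304 and the same `ETag` and `Cache-Control` headers, without a body.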

Page speed, compression, and media handling

Even for bots, performance signals matter. When you implement dynamic rendering for Googlebot and users, compress HTML, minify critical CSS, and strip unused scripts from snapshots.

- Compress images with modern formats and responsive sizes.

- Lazy load non-critical assets for human views, not for bot snapshots.

- Use CDN caching and Brotli or gzip for HTML and JSON payloads.
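Many CDNs compress text responses automatically, so hand-rolled compression mostly matters when a renderer or origin serves snapshots directly. This Node.js sketch uses the built-in `zlib` module. `chooseEncoding` and `compressSnapshot` are hypothetical helpers, and the negotiation is simplified: it prefers Brotli over gzip and ignores wildcard entries.

```javascript
const zlib = require("node:zlib");

// Pick Brotli, then gzip, else no encoding. Honors q=0 exclusions but
// otherwise ignores q-value ordering and the "*" wildcard (simplified).
function chooseEncoding(acceptEncoding) {
  const accepted = new Map();
  for (const part of (acceptEncoding || "").split(",")) {
    const [name, ...params] = part.trim().toLowerCase().split(";");
    if (!name) continue;
    const q = params.map((p) => p.trim()).find((p) => p.startsWith("q="));
    accepted.set(name, q ? parseFloat(q.slice(2)) : 1);
  }
  for (const enc of ["br", "gzip"]) {
    if ((accepted.get(enc) ?? 0) > 0) return enc;
  }
  return "identity";
}

// Compress a snapshot for the negotiated encoding and return body plus headers.
function compressSnapshot(html, acceptEncoding) {
  const encoding = chooseEncoding(acceptEncoding);
  const raw = Buffer.from(html, "utf8");
  const headers = { "Content-Type": "text/html; charset=utf-8", "Vary": "Accept-Encoding" };
  if (encoding === "br") {
    return { body: zlib.brotliCompressSync(raw), headers: { ...headers, "Content-Encoding": "br" } };
  }
  if (encoding === "gzip") {
    return { body: zlib.gzipSync(raw), headers: { ...headers, "Content-Encoding": "gzip" } };
  }
  return { body: raw, headers };
}
```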

Call it out in your SOPs. State clearly that images are compressed and that caching is active. This keeps dynamic rendering for Googlebot and users predictable and easy to review.

Security and reliability practices

Treat your router as a production app. A few guardrails turn dynamic rendering for Googlebot and users into a safe, resilient layer instead of a brittle workaround.

- Time out render calls after 5 to 10 seconds and fall back to cache.

- Limit HTML size and block unsafe query patterns to prevent abuse.

- Exclude authenticated and transactional routes from snapshots.

- Sanitize third-party scripts before caching.

- Log decisions and errors with request IDs for quick triage.
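The route exclusions, query limits, and size cap can live in one guard that runs before any render call. The prefixes, the five-parameter limit, and the 2 MiB cap below are illustrative assumptions, not recommended values.

```javascript
// Hypothetical route rules; replace with your own private and transactional paths.
const EXCLUDED_PREFIXES = ["/account", "/cart", "/checkout", "/login", "/api"];
const MAX_QUERY_PARAMS = 5;                 // illustrative limit
const MAX_SNAPSHOT_BYTES = 2 * 1024 * 1024; // illustrative 2 MiB cap

// Decide whether a URL may be prerendered at all.
function isSnapshotEligible(rawUrl) {
  const { pathname, searchParams } = new URL(rawUrl);
  // Match whole path segments so "/accounts-guide" is not caught by "/account".
  const excluded = EXCLUDED_PREFIXES.some((p) => pathname === p || pathname.startsWith(p + "/"));
  if (excluded) return false;
  // Unbounded query strings let anyone mint endless unique cache keys.
  return [...searchParams.keys()].length <= MAX_QUERY_PARAMS;
}

// Reject oversized renderer output before it is cached.
function isSnapshotSizeOk(html) {
  return new TextEncoder().encode(html).length <= MAX_SNAPSHOT_BYTES;
}
```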

Testing workflow that catches issues early

Do not ship blind. Validation proves that dynamic rendering for Googlebot and users outputs the right HTML and keeps human UX intact.

- Use curl to fetch HTML with a Googlebot user agent and inspect the markup.

- Validate titles, meta tags, canonicals, and structured data are complete.

- Paste URLs into Slack, WhatsApp, X, and LinkedIn to verify link previews.

- Run URL Inspection to confirm the crawled HTML matches your snapshot.

- Compare TTFB and cache hit rate across regions after deployment.
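Alongside manual curl checks, a small script can flag snapshots that are missing key tags. This sketch uses simple regular expressions that assume common attribute order and quoting, so treat it as a spot check rather than a validator. `extractSeoTags`, `missingSeoFields`, and `fetchAs` are hypothetical names, and the network call needs Node.js 18 or later for the global `fetch`.

```javascript
const GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";

// Fetch a URL the way a given client would (Node.js 18+ global fetch).
async function fetchAs(url, userAgent) {
  const res = await fetch(url, { headers: { "User-Agent": userAgent, Accept: "text/html" } });
  return res.text();
}

// Pull SEO-critical tags out of HTML with simple regexes. Assumes name/property
// appears before content/href and values contain no quote characters.
function extractSeoTags(html) {
  const pick = (re) => {
    const m = html.match(re);
    return m ? m[1].trim() : null;
  };
  return {
    title: pick(/<title[^>]*>([\s\S]*?)<\/title>/i),
    description: pick(/<meta[^>]+name=["']description["'][^>]*content=["']([^"']*)["']/i),
    canonical: pick(/<link[^>]+rel=["']canonical["'][^>]*href=["']([^"']*)["']/i),
    ogTitle: pick(/<meta[^>]+property=["']og:title["'][^>]*content=["']([^"']*)["']/i),
    jsonLdBlocks: (html.match(/<script[^>]+type=["']application\/ld\+json["']/gi) || []).length,
  };
}

// List the fields that are absent (or empty) in a snapshot.
function missingSeoFields(html) {
  return Object.entries(extractSeoTags(html))
    .filter(([, value]) => !value)
    .map(([key]) => key);
}

// Usage (needs network access):
// fetchAs("https://example.com/products/1", GOOGLEBOT_UA)
//   .then((html) => console.log(missingSeoFields(html)));
```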

Monitoring and KPIs

Track what matters so you can prove ROI. When dynamic rendering for Googlebot and users is working, you will see crawl and speed metrics move in the right direction.

- Index coverage for JS-heavy routes.

- TTFB for bot requests and cache hit ratio by route.

- Open Graph preview accuracy across major platforms.

- Structured data detection and rich result eligibility.
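If your edge logs record a route, cache status, and TTFB for each bot request, the cache and speed KPIs reduce to a simple aggregation. The log record shape here (`route`, `cacheStatus`, `ttfbMs`) is an assumption, so map your provider's fields onto it.

```javascript
// Summarize bot request logs per route: request count, cache hit ratio,
// and nearest-rank p50/p95 TTFB. Record shape is hypothetical:
// { route: "/products/:id", cacheStatus: "HIT" | "MISS", ttfbMs: 42 }
function summarizeBotLogs(records) {
  const byRoute = new Map();
  for (const r of records) {
    if (!byRoute.has(r.route)) byRoute.set(r.route, []);
    byRoute.get(r.route).push(r);
  }
  // Nearest-rank percentile over an ascending array.
  const percentile = (sorted, p) =>
    sorted[Math.min(sorted.length - 1, Math.ceil((p / 100) * sorted.length) - 1)];
  const summary = {};
  for (const [route, rows] of byRoute) {
    const ttfb = rows.map((r) => r.ttfbMs).sort((a, b) => a - b);
    const hits = rows.filter((r) => r.cacheStatus === "HIT").length;
    summary[route] = {
      requests: rows.length,
      hitRatio: hits / rows.length,
      p50TtfbMs: percentile(ttfb, 50),
      p95TtfbMs: percentile(ttfb, 95),
    };
  }
  return summary;
}
```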

Need help connecting SEO, UX, and revenue metrics end to end? Explore our analytics and reporting approach to keep your improvements measurable and aligned.

Migration path beyond dynamic rendering

Think of dynamic rendering for Googlebot and users as a bridge, not the destination. As your team adopts server rendering or static generation, reduce scope and rely on snapshots only where needed.

- Move top templates to SSR or ISG first to stabilize the core journey.

- Keep dynamic rendering for long-tail or rarely visited routes.

- When coverage is strong, retire the renderer and keep the cache layer.
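One way to phase the migration is a per-route plan that the router consults before prerendering. The prefixes and the `RENDERING_PLAN` structure below are hypothetical. The longest matching prefix wins, so a legacy subsection can stay on snapshots while its parent moves to SSR.

```javascript
// Per-route rendering plan during migration. Prefixes are hypothetical.
// "ssr": the framework already returns full HTML, so skip prerendering.
// "dynamic": keep serving prerendered snapshots to eligible bots.
const RENDERING_PLAN = [
  { prefix: "/products", mode: "ssr" },
  { prefix: "/products/legacy", mode: "dynamic" },
  { prefix: "/docs", mode: "ssr" },
];
const DEFAULT_MODE = "dynamic"; // long-tail routes stay on snapshots for now

function renderingModeFor(pathname) {
  let best = null;
  for (const rule of RENDERING_PLAN) {
    const matches = pathname === rule.prefix || pathname.startsWith(rule.prefix + "/");
    // The longest matching prefix wins, so subsections can override parents.
    if (matches && (!best || rule.prefix.length > best.prefix.length)) best = rule;
  }
  return best ? best.mode : DEFAULT_MODE;
}
```

In the router, a check such as `if (renderingModeFor(url.pathname) === "ssr") return fetch(request);` would skip the prerender path for migrated routes. Shrinking the scope then becomes a reviewed one-line change.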

This play keeps momentum high and risk low. It also aligns with long-term guidance while protecting short-term growth.

Strategic content and internal linking

Technical rendering is one lever. Content and internal linking still decide how authority flows. Dynamic rendering for Googlebot and users works best when paired with a clean architecture.

- Use descriptive anchor text, not generic clicks.

- Cluster related content and connect hubs to spokes.

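- Keep sitemaps updated with accurate lastmod dates, and reference them in robots.txt or submit them in Search Console. Google has retired its sitemap ping endpoint, so pinging after publish no longer helps there.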
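A sitemap with accurate `lastmod` values gives crawlers a direct signal about what changed. This sketch builds a minimal sitemap from a hypothetical list of `{ loc, lastModified }` records. A single sitemap file is limited to 50,000 URLs, so large sites need a sitemap index.

```javascript
// Escape the five XML special characters for text content.
function escapeXml(value) {
  const map = { "<": "&lt;", ">": "&gt;", "&": "&amp;", "'": "&apos;", '"': "&quot;" };
  return String(value).replace(/[<>&'"]/g, (c) => map[c]);
}

// Build a minimal XML sitemap. `pages` is a hypothetical array of
// { loc: absolute URL, lastModified: anything Date can parse }.
function buildSitemap(pages) {
  const urls = pages.map((p) =>
    `  <url>\n    <loc>${escapeXml(p.loc)}</loc>\n` +
    `    <lastmod>${new Date(p.lastModified).toISOString()}</lastmod>\n  </url>`);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}
```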

If you want hands-on support from specialists who blend code with content, our SEO services team can help plan, implement, and measure the rollout.

Design and DX considerations

Rendering changes should not erode design quality or developer experience. When done right, dynamic rendering for Googlebot and users sits quietly behind your stack and empowers faster iteration.

- Design systems should include accessible fallbacks and semantic HTML.

- Developers should ship predictable head tags via a common utility.

- Deploy pipelines should prewarm cache on publish for key routes.
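Prewarming can be a post-deploy step that requests key URLs through the router, so snapshots are cached before crawlers arrive. This sketch uses hypothetical names (`prewarm`, `fetchImpl`). It sends a user agent your router treats as eligible. That request will not pass if you verify Googlebot by reverse DNS, so a preview-bot user agent or a dedicated internal signal may fit better. Edge caches are often local to each data center, so a warm-up from one location may not warm others.

```javascript
// Request each URL with limited concurrency so snapshots land in cache.
// `fetchImpl` is injectable for testing; `userAgent` must be one your router
// treats as eligible for prerendering.
async function prewarm(urls, { fetchImpl = fetch, userAgent, concurrency = 4 } = {}) {
  const queue = [...urls];
  const results = [];
  async function worker() {
    while (queue.length > 0) {
      const url = queue.shift();
      try {
        const res = await fetchImpl(url, { headers: { "User-Agent": userAgent, Accept: "text/html" } });
        results.push({ url, status: res.status });
      } catch (err) {
        results.push({ url, status: 0, error: String(err) }); // network failure
      }
    }
  }
  // Start up to `concurrency` workers that drain the shared queue.
  await Promise.all(Array.from({ length: Math.min(concurrency, queue.length) }, () => worker()));
  return results;
}
```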

If you are planning a rebuild, our website design and development team can bake performance and SEO into your system from day one.

Advanced techniques for accuracy and scale

At scale, the details matter. The following patterns make dynamic rendering for Googlebot and users more robust across regions and traffic patterns.

Reverse DNS validation for critical bots

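For Googlebot on high-stakes pages, confirm ownership of the requesting IP. Run a reverse DNS lookup, check that the hostname ends in googlebot.com or google.com, then run a forward DNS lookup on that hostname and confirm it resolves back to the same IP. This makes dynamic rendering for Googlebot and users safer when bad actors spoof user agents.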
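This verification can be sketched in Node.js with the built-in `dns` module. The steps are a reverse lookup, a hostname check against googlebot.com or google.com, and a forward lookup that must return the original IP. `isVerifiedGooglebot` is a hypothetical helper, and the resolvers are injectable so the logic can be tested offline. Edge runtimes without DNS APIs would need another lookup path, such as DNS over HTTPS.

```javascript
const dns = require("node:dns").promises;

// Verify a claimed Googlebot IP: reverse DNS, domain check, forward-confirm.
async function isVerifiedGooglebot(ip, {
  reverse = (addr) => dns.reverse(addr),
  lookup = (host, opts) => dns.lookup(host, opts),
} = {}) {
  let hostnames;
  try {
    hostnames = await reverse(ip);
  } catch {
    return false; // no PTR record or lookup failure
  }
  for (const host of hostnames) {
    const name = host.toLowerCase().replace(/\.$/, "");
    if (!name.endsWith(".googlebot.com") && !name.endsWith(".google.com")) continue;
    try {
      // The hostname must resolve back to the same IP, or the PTR could be forged.
      const addrs = await lookup(name, { all: true });
      if (addrs.some((a) => a.address === ip)) return true;
    } catch {
      // Forward lookup failed; try the next hostname.
    }
  }
  return false;
}
```

DNS lookups add latency, so cache verified IPs for a while rather than checking on every request.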

Route-level TTLs and invalidation

Use shorter TTLs on feeds and longer TTLs on evergreen guides. Invalidate on publish or price change events. With this nuance, dynamic rendering for Googlebot and users stays fresh without churn.
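Event-driven invalidation can be a mapping from content events to affected snapshot URLs, followed by a purge. The event types, URL patterns, and the `deleteSnapshot` callback are hypothetical. In Cloudflare Workers, `deleteSnapshot` could wrap `caches.default.delete()` and build the same cache key your router uses.

```javascript
// Map content events to the snapshot URLs they make stale.
// Event shapes and URL patterns are hypothetical examples.
function urlsToInvalidate(event, origin) {
  switch (event.type) {
    case "article.published":
      return [`${origin}/blog/${event.slug}`, `${origin}/blog`, `${origin}/feed`];
    case "product.price_changed":
      return [
        `${origin}/products/${event.productId}`,
        ...(event.categoryPaths || []).map((path) => origin + path),
      ];
    default:
      return [];
  }
}

// Purge every affected snapshot and return the URLs whose purge failed,
// so the caller can retry them.
async function invalidate(event, origin, deleteSnapshot) {
  const urls = urlsToInvalidate(event, origin);
  const results = await Promise.allSettled(urls.map((u) => deleteSnapshot(u)));
  return urls.filter((_, i) => results[i].status === "rejected");
}
```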

HTML sanitization

Strip analytics scripts and third-party tags from snapshots. People still load them later. For bots, dynamic rendering for Googlebot and users should present clean, content-first HTML.
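Here is a sketch of that sanitization step, assuming snapshots arrive as strings. It goes a little further than stripping analytics: it drops every `<script>` element except JSON-LD, so structured data survives. Regular expressions are fragile against unusual markup. In Cloudflare Workers, the streaming `HTMLRewriter` API is a sturdier way to do the same job.

```javascript
// Strip executable scripts from a snapshot but keep JSON-LD structured data.
// Regex-based sketch: assumes well-formed <script ...>...</script> pairs.
function stripScripts(html) {
  return html.replace(/<script\b([^>]*)>[\s\S]*?<\/script\s*>/gi, (match, attrs) =>
    /\btype\s*=\s*["']?application\/ld\+json/i.test(attrs) ? match : "");
}
```

If some inline scripts must survive, for example a consent banner required in snapshots, narrow the filter to a blocklist of third-party hosts instead.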

Prewarming and edge KV

Prewarm new routes and store last-known-good snapshots. If rendering fails, dynamic rendering for Googlebot and users still serves cached HTML while a background refresh runs.
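The render-or-fallback step can be sketched with `Promise.race` and a deadline. `render` and `store` are hypothetical stand-ins for your renderer call and an edge key-value store. The race stops waiting but does not cancel the render, so pass an `AbortSignal` to your renderer call if it supports one.

```javascript
// Render with a deadline; on failure, fall back to the last-known-good snapshot.
// `render(url)` returns HTML; `store` exposes async get(key) and set(key, value).
async function renderWithFallback(url, { render, store, timeoutMs = 8000 }) {
  let timer;
  const deadline = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error("render timeout")), timeoutMs);
  });
  try {
    const html = await Promise.race([render(url), deadline]);
    await store.set(url, html); // refresh the last-known-good copy
    return { html, source: "fresh" };
  } catch (err) {
    const lastGood = await store.get(url);
    if (lastGood != null) return { html: lastGood, source: "last-known-good" };
    throw err; // nothing stored: let the caller serve the normal app
  } finally {
    clearTimeout(timer);
  }
}
```

In Workers, a request that falls back to the stored copy could also schedule a fresh render with `ctx.waitUntil()`, which gives the background refresh described above.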

Common pitfalls to avoid

Most failures are self-inflicted. Keep dynamic rendering for Googlebot and users on the rails by avoiding these mistakes.

- Prerendering everything, including human sessions and private pages.

- No cache plan, leading to slow TTFB and rising compute cost.

- Forgetting canonical tags or shipping conflicting robots directives.

- Allowing UA rules to match human visitors accidentally.

- Not documenting scope and change control for the bot allowlist.

Governance and documentation

Write down what you render, who gets it, and why. Clear documentation makes dynamic rendering for Googlebot and users explainable to stakeholders and easy to audit later.

- Maintain a versioned bot allowlist with change history.

- Record cache TTLs by route and conditions for invalidation.

- Keep test scripts for UA simulation and HTML comparison.

Measurement playbook

Proving value builds momentum. After rollout, show how dynamic rendering for Googlebot and users impacts crawling, indexing, and conversions that follow.

- Baseline index coverage, impressions, and clicks before changes.

- Track cache hit ratio and bot TTFB after rollout.

- Monitor preview accuracy on top channels that drive shares.

- Report wins monthly and adjust scope based on data.

For a cross-functional approach, our analytics and reporting crew can connect SEO, CRO, and revenue signals so you prioritize with clarity.

Frequently asked questions

Here are quick answers to common questions about dynamic rendering for Googlebot and users, plus tips to keep your rollout smooth.

What is dynamic rendering for Googlebot and users?

It is a selective prerendering strategy where bots receive fully rendered HTML and people see your normal app. Dynamic rendering for Googlebot and users improves crawlability, previews, and structured data consistency.

Is this a permanent solution?

No. Treat dynamic rendering for Googlebot and users as a bridge. Long term, aim for SSR, static generation, or hydration that exposes crawlable HTML by default.

Which bots should I include?

Start with Googlebot, Bingbot, and major social link expanders. Expand slowly and document decisions. This keeps dynamic rendering for Googlebot and users predictable.

How do I handle personalization?

Exclude authenticated and personalized routes. Snapshots should never include sensitive data. In practice, dynamic rendering for Googlebot and users targets public, content-heavy pages.

How do I test quickly?

Use curl with a Googlebot UA, inspect HTML, and compare to a normal request. Validate in Search Console. Confirm Open Graph and Twitter Cards are correct in real previews.

What metrics prove success?

Index coverage, cache hit ratio, bot TTFB, and preview accuracy. If these improve, dynamic rendering for Googlebot and users is doing its job.

Can I keep using it after SSR?

Sometimes yes for edge cases. However, as SSR coverage grows, reduce your dynamic rendering footprint until it is no longer needed.

Conclusion

You do not need a full rebuild to become crawlable. With careful routing, caching, and QA, dynamic rendering for Googlebot and users can unlock visibility now while you evolve toward SSR or static generation.

If you want expert guidance to scope and ship this approach with confidence, the team behind our SEO services can help you align technical foundations with growth.

References